Protecting data privacy

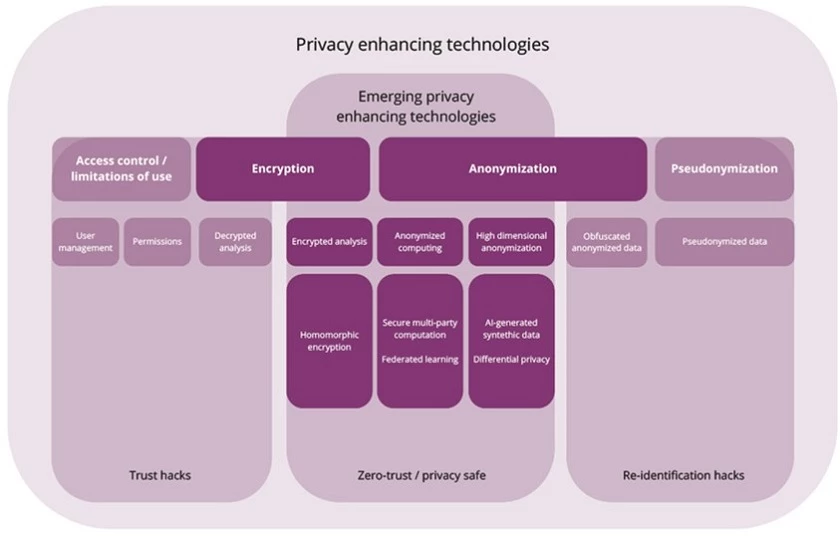

The hope is that, as new privacy enhancing technologies (PETs) emerge and are adopted, banks will be able to use data while still protecting the privacy of the individual.

Ville says: “The idea is to move into zero trust, in the sense that banks can, in a completely privacy-protecting way, use the data for creating better analytics services and improved solutions for their customers, while at the same time not compromising at all on the actual privacy of the customer data. It is really this interesting meeting point between access control and anonymisation techniques, kind of in between, so getting a little bit of the best of both worlds. It’s never as clear as that though, of course. It’s early days for these techniques and it’s yet to be seen which one of them will become mainstream. Actually, it ends up being almost always a combination of multiple things, depending on what you do. It’s almost never just a single technology that is used to solve your needs.”

“The key thing is collaboration. Finding a way to protect the privacy of the customer and collaborate with other industry providers in a way that doesn’t break the competitive nature of the industry either. Some banks actually have already taken steps towards using these technologies in the areas of assessing credit risks and fighting financial crime. Banks can now make a choice. They can continue to process the data internally behind lock and key and do the best they can. However, the future is in collaboration and I think it’s up to the banks now to decide if they want to move forward with developing these new types of privacy enhancing technologies to allow those ecosystem benefits to become a reality. It’s time to start working on these things instead of doing your own internal processing to a large extent,” concludes Ville.

Mobey Forum’s AI and Data Privacy Expert Group was formed in March 2020 to address how banks and other financial institutions can strike the balance between data privacy, security and innovation in the age of Artificial Intelligence (AI). This report is the first in a two-part series. The second part is anticipated later in 2021 and will take a deeper dive into the different PETs, their variable stages of maturity, and their potential to solve some of the most urgent privacy risks that banks face today.

You can read more about the Mobey Forum here.

To discuss the themes mentioned in this article further, write to Ville at ville.sointu [at] nordea.com.